Introduction¶

I have been reading and implementing specifications for a long time. I truly believed that I had seen with and dealt with every situation presented in any specification. I was therefore surprised when I started reading the GFM specification and I noticed an entry in the table of contents labelled Phase 2: inline structure. Thinking “if it is in the table of contents as a separate entry, it must be important”, I started reading that section, waiting for some big revelation to appear.

That Phase 2 section starts with an example which is an extension of the example used

in the preceding section in the document. After a bit of comparison with the

previous example, it should be obvious that the string \n was changed to softbreak

and the string "Qui *quodsi iracundia*" was changed to

str "Qui " emph str "quodsi iracundia". Going back to my article on

Autolinks, Raw HTML, and Line Breaks,

the translation from \n to the soft line break (or soft-break) is easy to see,

the inline parser code to perform that translation having just been implemented. And

looking at the provided example, emphasis looked like it was going to be easy. So why

include implementation notes on it if it was so easy?

On the surface, the translation of emphasis markers to emphasis would seem to be easy to understand, if not for the start of the next section of Phase 2:

By far the trickiest part of inline parsing is handling emphasis, strong emphasis, links, and images. This is done using the following algorithm.

I was kind of skeptical, but I continued reading… and reading… and reading.

After a couple of thorough reads of the section in the GFM specification on

Emphasis and strong emphasis,

I started to understand why emphasis is not as easy as it seems. At its root it is

pretty simple: use one * for emphasis and ** for strong emphasis. No problems

there. If you need a subtle change in the emphasis rules, primarily around intra-word

usage and use around punctuation, use _ instead of *. Okay…why do I need so many

rules?

Isn’t it as easy as this?

Isn't it *as easy* as **this**?

It took a bit more reading, but for me, it was some questions from the preface of the specification that made me understand. The big revelation finally hit me in the form of those questions and the ambiguity that they raised.

How are the following cases from the specification of strong emphasis interpreted?

***strong emph***

***strong** in emph*

***emph* in strong**

**in strong *emph***

*in emph **strong***

How about the following cases of nested emphasis?

*emph *with emph* in it*

**strong **with strong** in it**

And finally, how about intra-word emphasis?

internal emphasis: foo*bar*baz

no emphasis: foo_bar_baz

For most of those examples, I could come up with at least 2-3 different ways that they could be parsed. As such, it is probably a good idea that there are 17 rules to help with emphasis. But before I could work on the implementation, I needed to do some more research.

What Is the Audience For This Article?¶

While detailed more eloquently in this article, my goal for this technical article is to focus on the reasoning behind my solutions, rather that the solutions themselves. For a full record of the solutions presented in this article, please go to this project’s GitHub repository and consult the commits between 10 March 2020 and 13 March 2020.

Emphasis is Hard and not always Consistent¶

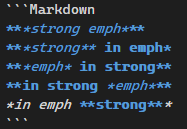

For any readers that have been following this series, it might be apparent that I have veered away from my usual habits. Usually I label all of my Markdown code blocks properly to allow my static site generator to take care of the proper coloring. However, for the above examples, I just marked them as normal text blocks without any language specified. This was not a mistake; it was a conscious decision.

When I started entering the fenced code

block for the text, everything looked normal. However, once I added the Markdown

language tag at the start of the block, that all changed. What I saw in my VSCode

editor was the following:

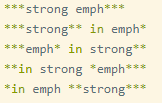

Publishing the page and looking there, I saw that same text rendered as:

And finally, running it through my favorite GFM test link, the following was generated:

strong emph

strong in emph

emph in strong

in strong emph

in emph strong

Three different platforms and three different ways to interpret the emphasis. To me, it was then that it was apparent why a consistent way to implement emphasis was needed in order to produce consistent results.

Utilizing the Wisdom of Others¶

Instead of trying to figure everything out myself, tempting as that can be sometimes,

I decided to implement the algorithm as stated in the GFM specification with one small

change: no links for now. To accomplish this, I kept to the algorithm’s recipe as

described, but I left the implementation of the look for link or image section for

later. My plan was to focus on the emphasis itself before moving on to

links, which I still believe was a very good decision on my part.

In looking at the algorithm through a bit of a sideways lens, I observed that there were two main tasks that the algorithm accomplishes: building the delimiter stack and resolving that same delimiter stack. Without taking a two-phase approach to the algorithm, I determined that I would be multitasking two separate objectives, and not doing either of them very well. I just felt strongly that it was better to split the task in two and focus on each one until it’s completion. In the end, I was going to finish both part of the algorithm, so if both tasks were done, emphasis would be implemented.

The first task: building the delimiter stack.

Building the Delimiter Stack¶

Taking a high-level look at the algorithm, I came up with the following two

observations. In cases where a delimiter is not used in a special way, it needs to be

represented by a simple text node. Where it is used in a special way, it may need to

be removed and properly replaced with the tokens that the delimiters represent. The

“may” part of that last statement is there as, after looking at some of those examples,

it may be possible to have a case where the parser only uses some of the emphasis

delimiters, but not all of them. Once such example is the string this **is* my text.

To accomplish this, I created a new class SpecialTextMarkdownToken that is a child

of the TextMarkdownToken class. In cases where the delimiter is not completely

consumed, this allows the new token to be easily consumed by existing code, without

any modifications needed. Supporting the additional parsing requirements, the new

SpecialTextMarkdownToken tokens adds the repeat_count, active, preceding_two,

and following_two fields, enabling the algorithm to be implemented properly.

Finally, but most importantly, to ensure the algorithm works properly, that new token

is added to the delimiter stack.

To properly test that I had produced the correct tokens and delimiter stack, I started by enabling one of the emphasis tests and examining the resulting tokens. I compared those tokens against the tokens that were there before, looking for changes that I expected to be induced by the presence of the emphasis delimiters. As I was only building the delimiter stack at this point in the process, this was easy to verify.

A solid example of this is the emphasis test for

example 364,

which is the example that I selected as my initial test. That example contains the

string foo*bar* to test against and is a pretty easy example. Before the delimiter

stack was built, I verified that the string was represented by a single

TextMarkdownToken token containing the string foo*bar*. After the delimiter stack

was built, that same string was represented by 4 tokens:

- a

TextMarkdownTokentoken with the stringfoo - a

SpecialTextMarkdownTokentoken with the string* - a

TextMarkdownTokentoken with the stringbar - a

SpecialTextMarkdownTokentoken with the string*

In addition, the delimiter stack contained both SpecialTextMarkdownToken

tokens in the order that they appear. At this point in the process, that was the

expected token output, and I was happy to see it work so well.

As one good test was not solid proof to me, I continued to make sure that I had decent results after building the delimiter stack by repeating this examination with 5-6 other tests. While there were a couple of small issues along the way, I was able to quickly get to the part where I had a high degree of confidence that the delimiter stack was correct. Now it was on to the resolution of that stack.

Resolving the Delimiter Stack¶

Skipping past the look for link or image section of the GFM specification, I sat down

with my headphones on and poured over the section on process emphasis a couple of

times, taking notes as I went. I then went back to the

emphasis scetion

of the GFM and started pairing each of the 17 rules to each note that I made and was

happy to find a 1-to-1 mapping between the two lists.

And yes, that is right… 17 rules. It did take a while, but I validated each step

in the specification against each of the rules, making detailed notes as I went. While

it was slow to emerge, by the time I reached the last rule I had a plan to follow.

To implement each rule, I would implement the tests in order of the rules that they

support, with a slightly irregular start order. Basically, instead of starting with a

“1 2 3 4” order, I started with a “3 1 4 2” order. The reason for this weird ordering

is because the algorithm starts by identifying eligible closing emphasis, then trying

to locate a matching start emphasis. To accommodate that, I decided to start with the

close and start pair for the normal emphasis (* character) following that same pattern

with the strong emphasis (_ character).

While I was thankful for the examples in the GFM specification before implementing emphasis, having the emphasis examples, rules, and examples-by-rule-groups made me even more thankful. Starting with rules 3 and 1, I was able to get solid code written to implement what was needed to support those rules. The next two rules, rules 4 and 2, were added using the first two rules as a building block. For the remaining 13 rules, I just felt that each rule and the examples to support it just naturally flowed, each one adding another small piece of the puzzle. While it did take some time to get all 17 rules implemented and the tests for all 130 examples passing, it all felt like just another step. It was a nice feeling.

Emphasis - The Base Cases¶

As I progressed with the rules, it was nice to see the natural progressing in the sequences that I was enabling for the parser. This progression was so natural and straightforward, I want to take the time to show how things progressed over the course of the 17 rules. The truth is that when I was finished, it felt more like 4-5 rules than 17 rules.

In order of enablement, first there were the simple sequences enabled in rules 1 to 4:

This is *my* emphasis.

<p>This is <em>my</em> emphasis.</p>

This is my emphasis.

Rules 5 to 8 added strong emphasis:

This is *my* **strong** emphasis.

<p>This is <em>my</em> <strong>strong</strong> emphasis.</p>

This is my strong emphasis.

Rules 9 and 10 added clarity on how to handle nested emphasis:

*foo**bar**baz*

<p><em>foo<strong>bar</strong>baz</em></p>

foobarbaz

Rules 11 and 12 add clarity on escaping delimiters and how excess delimiters are handled:

***foo**

<p>*<strong>foo</strong></p>

*foo

Emphasis - Resolving the Ambiguity of Complex Cases¶

Using just the prior rules, there were many representations that could be inconsistent from parser to parser. These rules are used to resolve that ambiguity. As these rules resolve ambiguity, I am only showing the HTML output in a code block instead of showing the HTML output in a code block and the actual HTML itself.

Rule 13 adds the concept that one <strong> is preferred to <em><em>:

******foo******

<p><strong><strong><strong>foo</strong></strong></strong></p>

Rule 14 adds the concept that the <strong> tags should always deeper than the <em>

tags, if used together:

***foo***

<p><em><strong>foo</strong></em></p>

Rule 15 adds the concept that in a case of overlapping emphasis spans, the emphasis span that ends first overrides the interpretation of the second possible emphasis span as an actual emphasis span.

*foo _bar* baz_

<p><em>foo _bar</em> baz_</p>

Rule 16 adds the concept that if two emphasis spans have the same closing delimiter, the shorter of those two emphasis spans is interpreted as an actual emphasis span.

**foo **bar baz**

<p>**foo <strong>bar baz</strong></p>

Rule 17 adds a clarification that number of inline elements are more tightly grouped that emphasis spans, meaning that the parsing of those inline elements takes precedence over the parsing of the emphasis spans.

*a `*`*

<p><em>a <code>*</code></em></p>

Emphasis - Rule Summary¶

Without implementing a Markdown parser, it might be hard to appreciate how natural the process of building the emphasis support into the parser was. But please believe me, it was a thing of beauty. The best way to describe it was that as I was implementing each section, I jotted down notes for myself that usually started with “what about…”. It was usually within a rule or two that I scratched off that note as it was no longer an issue. By the time I got to the end of the emphasis implementation, all the notes were scratched off. That kind of progression when implementing a specification are rare, and it was just wonderful to witness.

What were the bumps on the road?¶

During the implementation to support inline emphasis, other than “fat man finger” typing errors, there were only a couple of issues that impeded its addition to the parser. The first was because I got impatient and tried to implement one of the rules ahead of time and the second was caused by a subtle change I made to the algorithm and the side effects that I needed to abate. Other than those two issues, the development just flowed easily from the rules and their examples.

The impatience issue occurred when I was trying to take care of a side-effect of rule 11, but I was trying to take care of it when implementing rule 1. It sidetracked me for a couple of hours without any resolution. It was not until I double checked that it was indeed part of rule 11 that I abandoned it until later. The “funny” thing is that when I did get to implementing rule 11 in the proper order, it was implemented with almost no friction in less than 5 minutes. Because of the way the rules build on each other, in hindsight it makes perfect sense that I ran into issues trying to implement part of rule 11 at the beginning.

The change issue was caused by a subtle change that I made to the algorithm to better

deal with Python’s data structures. To simplify the implementation in Python, the

references in the

delimiter stack were made with indices (or “indexes” for Americans) instead of pointers.

In the “if one is found” section of the algorithm there are two cases where the

algorithm calls for the removal of nodes from the delimiter stack. Instead of removing

the elements of the delimiter stack and recomputing the indices, I just altered the

algorithm to work better with the active field. While this did cause a few

small issues that needed to be resolved, in the end I believe it still was a better

course of development to take.

What Was My Experience So Far?¶

Having taken time to do my research and to have a solid plan emerge, it was nice to see that the plan paying off after the code for the first couple of rules were implemented. Often when you plan an approach for a project, in your head you always think things like “I’ll have to switch to plan B if I see…”. In this case, while I still thought that, I was able to stick with my first plan the entire way through. That was nice and gratifying.

I also cannot stress how impressed I am with the authors of the GFM specification and the effort they took to specify the emphasis elements. Not only did they work hard to resolve any ambiguity with 17 rules, but they provided a solid road map for implementers to follow with a suggested approach. For me, the beauty of those two parts of the specification is how they weave together, each one reinforcing the other one. In its simplest form, they took something that I was sure was going to be a headache and made it very easy to implement correctly.

Basically, I started this part of the parser with a feeling of dread, thinking that I would plan an approach and switch to plan B, or even to plan F. But with some good planning on the behalf of the authors of the GFM specification, it went off without any major issues. It was cool to see that happen.

What is Next?¶

Having had a very smooth time implementing emphasis, it was very tempting to just dive in and tackle all of the link support at the same time. However, in looking at the different types of links in the specification, I decided that inline links would be a good place to start. That is where I will pick up in my next article!

Comments

So what do you think? Did I miss something? Is any part unclear? Leave your comments below.