Summary¶

In my last article, I focused on refactoring that was performed to clean up the code base of the PyMarkdown project. In this article, I talk about how I started to learn about finding and implementing performance improvements in the PyMarkdown project using cPython and SnakeViz.

Introduction¶

While I have mentioned before that I was starting to investigate performance measurements for the project, it was time to bring those measurements and my observations on those measurements into the light. Based on initial measurements, I knew there was a big performance degredation going on with the logging library and my use of it. If I wanted the PyMarkdown project to be even remotely performant, I knew I needed to deal with at least that performance refactoring. At the same time, as I knew I would need to apply other performance improvements on the project down the road. So, I just figured it was a good time to learn about the analyze-deduce-change cycle of performance metrics and how to perform it with a Python program.

Basically, I needed to spend some time learning how to properly measure and improve the performance of the PyMarkdown project. I was stoked!

What Is the Audience for This Article?¶

While detailed more eloquently in this article, my goal for this technical article is to focus on the reasoning behind my solutions, rather that the solutions themselves. For a full record of the solutions presented in this article, please go to this project’s GitHub repository and consult the commits between 03 Feb 2021 and 13 Feb 2021.

Dipping My Toes Into the Water¶

Having upgraded the PyMarkdown project to Python 3.8, I was able to use two Python tools that I was eager to learn from: cProfile and SnakeViz. The best way to think about these tools is that cProfile allows the Python interpreter to collect information on what happened during the execution of a program and SnakeBiz is a useful way to present that information. While I have tried to use cProfile before to analyze this project, I found it too cumbersome to read effectively. For my use, I needed a GUI display that I could more easily navigate through. For me, SnakeViz provided just that interface.

Getting Started¶

Getting started using these tools was easy. In the project’s home directory, I

have a script, named run_blog.cmd, that I use to run the PyMarkdown project over all

my articles for this site. This script is very basic and very simple:

pipenv run python main.py --stack-trace -d MD018,MD020 ../blog-content/website/content/articles/SoftwareQuality

So, I copied that script to a file named prof_blog.cmd and changed the contents

of the script file to:

pipenv run python -m cProfile -o p0.prof main.py --stack-trace -d MD018,MD020 ../blog-content/website/content/articles/SoftwareQuality

That was it! Nothing else was needed. Running that new script, a new p0.prof file

was deposited in the project directory. To save time, I created a new file (with yet

another imaginative name) named show_profile.cmd and place the following text into

that file:

pipenv run snakeviz p0.prof

Running that script, it was obvious that it started a webserver on the command line, and then proceeded to open up a new tab in my browser to show me the results on.

Looking For The Obvious Starting Point¶

I will not go into how to use SnakeViz, as I believe the SnakeViz homepage does a real good job of walking users through the required steps. It was presenting a lot of information, and I needed to find a way to focus and walk through the data. While I did confirm that I need a GUI to help me walk through the data, it was not the graphs that were helping me out. It was being able to click on various parts of the tables and dig down into the performance of the items that I clicked on. That is what really helped me understand things!

After getting more comfortable with the output, I cleaned everything up and reran the

prof_blog.cmd script, followed by the show_profile.cmd script and was greeted

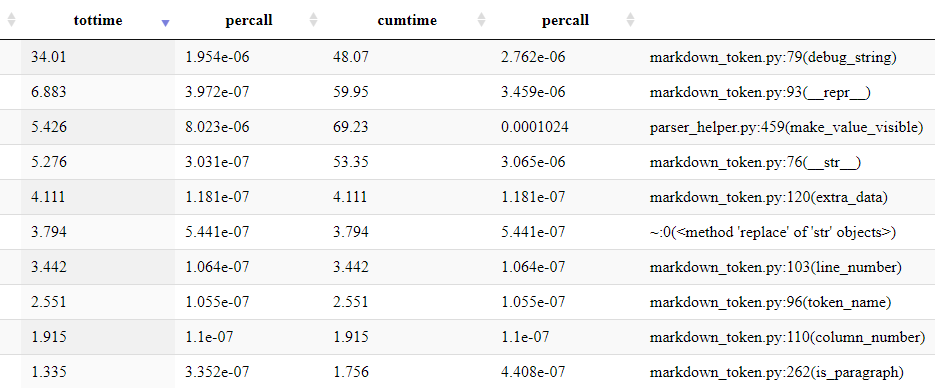

with this information:

As this learning was being done in the background as I was working on the project, I let

that picture sink in for a bit while I thought about the implications in my spare time.

But the results were obvious to me. Based on what I

was seeing, the first 5 items in the performance list were taking 54 seconds of execution

time, with none of those items having anything to do with the processing. That time was

being spent processing all the debug information that I had in place to help me diagnose

issues. But as far as I knew, with the project at its default settings, the project was

using the WARN debug level, and should not be outputting anything

to the logs. As it should not be outputting anything to the logs, it logically

followed that it should not be creating debug strings that are used to place information

in those logs. I was stumped.

Another Try With A Fresh Mind¶

After fixing some issues in the project, I returned to this problem with a fresh mind. This time, as I looked over the code, I noticed the issue almost right away. While the information was not being output to the logs, it was being prepared and formatted assuming it was being added to the logs. A good example of this is this snippet of code:

LOGGER.debug(

"coalesce_text_blocks>>>>%s<<",

ParserHelper.make_value_visible(first_pass_results[coalesce_index]),

Whether the information gets logged or not, the interpreter is going to go

through the process of preparing the string passed as a parameter into the debug

function. In this case, it is going to dereference the first_pass_results list

using the index coalesce_index and passing that into the

ParserHelper.make_value_visible function. Yup, that is the same make_value_visible

function that is in the number 3 position on the above list. To make matters worse,

the first_pass_results list contains tokens that made it through the first pass of

parsing. This means that the debug_string function of the MarkdownToken class is

being called for the token inside of the make_value_visible. And yes, that is the

same debug_string that is in the number 1 position of that same list.

Basically, every time that line is hit, the interpreter prepares the data, whether it is

needed or not. For this line, that contributes to the debug_string function being in

position 1 of that list and the make_value_visible function being in position 3 of that

same list. It was then that I knew that I needed to find a way to log the information

but delay evaluation of any parameters passed to it until the logger knows that it will be

logging that information.

The Benefits Of Experience¶

Having faced issues like this before, I had some ideas on how to proceed, I just did not know if Python supported any of them. The best approach that I had in my developer’s toolkit was to use a Facade pattern to create a class that would handle any transformations of the parameters that I required, but only apply those transformations if the information was actually going to be logged.

Throwing something together quickly, I came up with the following:

class ParserLogger:

def __init__(self, my_logger):

self.__my_logger = my_logger

def debug(self, log_format, *args):

if self.__my_logger.isEnabledFor(DEBUG):

msg = self.__munge(False, log_format, args)

self.__my_logger.debug(msg)

@classmethod

def __munge(cls, log_format, args):

split_log_format = log_format.split("$")

recipient_array = [None] * (len(split_log_format) + len(args))

for next_array_index in range(0, len(recipient_array)):

if next_array_index % 2 == 0:

recipient_array[next_array_index] = \

split_log_format[int(next_array_index / 2)]

else:

recipient_array[next_array_index] = \

ParserHelper.make_value_visible(

args[int(next_array_index / 2)])

return "".join(recipient_array)

Hopefully, the __init__ function and the debug functions are self-explanatory as they

were the simplest parts of the class to write. The rest took a bit of work to get working

right, but it was worth it. Instead of passing in logger-formatted strings, I decided to

use my own formatting. Therefore, in place of using the %s sequence to key a

substitution as with normal logging

calls, I opted for the simpler $ sequence. For debugging uses, I do not want to worry

about what type of object is being passed or making sure it is cast to a string type.

That is why I created the make_value_visible function. But to seamlessly incorporate

those requirements together was going to take some work.

The first thing that I needed to do was to create a list that would hold the information.

This meant that I had to have enough space for each part of the log_format string

between $ characters and for each argument passed into the function.1 With that

list created and initialized to None instances, I then needed to populate that

list. The function iterates from 0 to the index that is 1 less than the size of the

list. When the index is an even number (if next_array_index % 2 == 0:), the next item

is populated with the information from the log_format string split on the $

character. When the index is odd (the else clause), the next item is populated with the

results of the next argument being processed through the make_value_visible function.

Essentially, between the module operator % and the divisor operator /, the loop

takes elements from each list, alternating which list it is taking a value from.

For me, the important part to concentrate on is that the formatting of the information,

including the call to the make_value_visible function, is only done after the debug

function has determined that debug logging is enabled. If it is not enabled, the only

overhead was calling the function with the specified parameters and the if statement at

the start of the debug function. For me, that was ideal!

And to be honest, in the first couple of iterations, I had to work on how the list was populated with the information from the other two lists. In the end, the modulo approach was the most efficient and most tidy, so it won out. I tried to be fancy with how I was populating the lists, but they did not pan out. The clear winner was the above solution that used little code and basic high school math.

Almost There¶

It only took a couple of hours to come up with the ParserLogger prototype, which was

encouraging. Writing a couple of simple throwaway scenario tests, I was able to verify

that it was working properly almost right away. There was just one small problem.

The source of the information.

When any good logger logs information, it includes information that describes where the log information was submitted from. For example, in its default configuration, the Python logging library output for our sample logging line is:

DEBUG pymarkdown.coalesce_processor:coalesce_processor.py:26 coalesce_text_blocks>>>>[text(4,1):end:]<<

But with the current implementation of the ParserLogger class, the same logging location

was being logged for each line of information: the line in the ParserLogger class where

the lower-level logger was being called. From my experience, I expected this, so I turned

to the

Python logging documentation

to look for something very specific. On every platform where I have seen it supported,

the thing I was looking for was a parameter to the debug function that, on logging

packages where it is supported, always had “stack” in the title. This information helped

me to weed out parameters that were not helpful, landing on: stacklevel. Directly from that documentation:

The third optional keyword argument is stacklevel, which defaults to 1. If greater than 1, the corresponding number of stack frames are skipped when computing the line number and function name set in the LogRecord created for the logging event. This can be used in logging helpers so that the function name, filename and line number recorded are not the information for the helper function/method, but rather its caller. The name of this parameter mirrors the equivalent one in the warnings module.

Loggers are not a new feature in programming languages, and logging helpers are almost as old as the logging features in those programming languages. It stood to reason that Python would have support for this, but I was surprised to find out that it was only added in Python 3.8. Lucky for me, as the workarounds are workarounds that I would prefer not to resort to.

Armed with this information, I changed this line in the sample:

self.__my_logger.debug(msg)

to this line:

self.__my_logger.debug(msg, stacklevel=2)

and everything just worked. After that, I did a bit of cleaning up to make the class more production ready before applying it on a grand scale.

The Big Changeover¶

While this would not be last big performance changeover in the history of the project, it was the first. As changeovers go, it was easy to do but large in scope. I searched for the text:

LOGGER = logging.getLogger(__name__)

at the top of each file and replaced it with the text:

POGGER = ParserLogger(logging.getLogger(__name__))

From there, it was a simple manner to search through the files, looking for any instances

of LOGGER.debug and changing them to POGGER.debug. As I changed the module the name

of the variable from LOGGER to POGGER, I also looked for any instances of %s in the

string and transferred them over to the new $ syntax. Finally, if there were any calls

to str or make_value_visible, I removed them, leaving only the bare minimum required

to log the requested information.

A great example of this change is the sample logging line from above:

LOGGER.debug(

"coalesce_text_blocks>>>>%s<<",

ParserHelper.make_value_visible(first_pass_results[coalesce_index]),

which was changed to:

POGGER.debug(

"coalesce_text_blocks>>>>$<<", first_pass_results[coalesce_index],

)

Smaller, more compact, and no need to call the make_value_visible when passing in

the value. A win from many viewpoints!

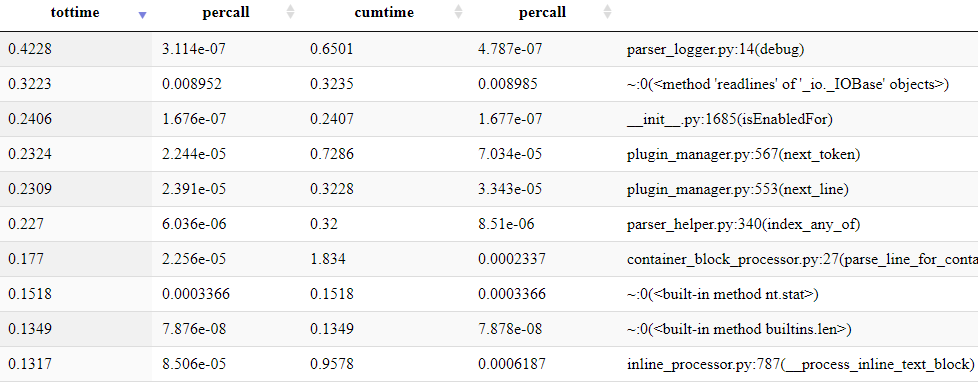

It was a long process, but I was able to check along the way to see if the scenario tests were still passing, correcting any errors as I went. This helped me by breakig up that long, boring task into smaller sections. Then, after a lot of search-and-replace work, I completed the last change and ran the performance metrics again, and I was greeted with this information.

Phew! All that work paid off. From experience, I knew I was very lucky to have something that was so obviously off balance as my first performance refactoring. I went from having the first 5 items in the performance list taking 54 seconds to a slightly different set of 5 items taking 1.446 seconds to complete. That was a win.

I want to stress again for any readers: this was luck. In my mind, there was a greater chance that I would be met with a different set of 5 items at the start of the list that were harder to improve. This was a slam dunk of a change because it was so obvious. Keeping that in mind, it was time to move on to other tasks.

About That Logger Name?¶

Oh… POGGER? Why POGGER? I thought that PERFORMANCE_LOGGER was too long. I

thought that PLOGGER was too short and I felt it might be confused with LOGGER on a

quick

read. After a couple of simple experiments, with me as the guinea pig, I was clearly

able to distinguish between POGGER and LOGGER in some test files. Because of the

different replacement sequence ($) and no need for calling str around non-string

parameter, I felt that having that distinguished difference was important.

Once Again, Link Reference Definitions¶

I wish I could say that I could keep away from these tokens, but as they are the most troublesome token, I guess I do not get my wish. During the refactoring that was documented in the last article, I suspected that things might not be good with Link Reference Definitions if they were inside of Fenced Code Blocks or HTML Code Blocks. Based on a couple of quick tests, those suspicions were confirmed. For some reason, the logic for preventing a Link Reference Definition from starting within one of those two blocks was either missing or not working properly. Time to debug!

Since this article is mostly about me learning about performance tuning in Python, I will not go into too many details. Let’s just say that the code that I thought I had in place to prevent that happening was not there. Even more than that, after working on it for a good solid four hours in the evening, I only got most of the scenario tests working, with nine scenario tests still left as disabled. As these scenarios are very much off the beaten path, I felt okay in marking them as disabled and handling them in the future.

Link Tokens and Image Tokens: The Gathering¶

While it probably sounded better in my head than when I typed it in, it did feel like a

cheesy name for an old 1980s series that was thankfully not produced. This task

was a simple one: as much as possible, merge the code to handle link tokens and image

tokens. The interesting thing was that this was already partially done. In a fair

number of areas, such as the __calculate_link_and_image_deltas function, there was

already code that handled both tokens:

if current_token.is_inline_image:

active_link_uri = current_token.image_uri

if current_token.pre_image_uri:

active_link_uri = current_token.pre_image_uri

...

else:

active_link_uri = current_token.link_uri

if current_token.pre_link_uri:

active_link_uri = current_token.pre_link_uri

...

It just made sense to make it official and merge them instead of having this artifical divide between the two tokens.

The first part was relatively easy. Starting with a new base class for both tokens, I

carefully moved any member field that had the exact same name in both classes to the base

class. At that point, there were only two fields that needed special attention and one

field that was distinct to the Image token: the image_alt_text field. The two fields,

image_uri and image_title were renamed to meet the Link token counterparts, link_uri

and link_title, with a global search and replace taking care of any occurrences of the

old name.

But I was not done yet! I had also wanted to simplify calls to those pairs of fields for

quite some time. There are a couple of specific cases where the individual field is

needed and the link_title field is used. In some cases, the original form of the data

is needed and the pre_link_title field is used. To save space in the token, if both

fields have the same value, the pre_link_title field is set to None. So, to make it

easier to deal with that, I added the active_link_title property and the

active_link_url property to fetch the value that I want.

After all that work, the code above was replaced with this one line:

active_link_uri = current_token.active_link_uri

Single Call Special Character Resolution¶

This is another task that I have wanted to do for a while, and I am pleased

with how well it proceeded. Along the way, I have needed to define special character

sequences to deal with the dual representation of data in the token: actualized for the

HTML rendering and source for the Markdown rendering. To accommodate this representation,

to present the required aspect of that data, I have developed a series of functions in

the remove_* and resolve_* namespace of the ParserHelper class.

But as I have been looking at the code in the last couple of weeks, one thought occurred to me about the various invocations of the various functions to remove those characters. Did I really need specific functions called all the time, or could I just call a “remove everything” function most of the time and be safe?

To answer that question, I created the resolve_all_from_text function and the

remove_all_from_text function. For each function, I located what I thought was the

most consistent ordering of the main set of functions and placed them in those two

functions. I then went through the source code, replacing one of more instances of the

main set of functions with these “shorthand” functions. In the 41 instances where these

functions were called, either singly or in groups, there were only 3 instances where

calling the “everything” function was detrimental to the operation of the parser.

In the other 38 instances, multiple trips were avoided while cleaning up the code at

the same time.

And Of Course, Some Cleanup¶

And what would a set of tasks be for me without some cleanup tasks? There really was not

anything too spectacular that I changed in these tasks, just some little things here

and there. I used my first list comprehension in the

__calculate_for_container_blocks function and I believe I have determined that I cannot

use any more due to the nature of the PyMarkdown project. It was not as hard as I thought

to create, but I still need to figure it out some more to really understand it. In the

HtmlHelper class, there were three functions that were using relatively big conditionals

to determine whether a character was valid for the given HTML construct. After some

research to confirm that I messed up the _ character and the - character in one of

the conditionals, I replaced all three with a simpler in clause.

As always, nothing too stellar, but a number of small items that made me feel better about the state of the project.

What Was My Experience So Far?¶

When I said in the Introduction section that I was stoked about this, I was not kidding. Two constants in my development career that have always kept me young and looking forward is that I love challenges and I love to learn. But instead of learning finely tuned concepts, I try and learn those concepts and the “meta” ideas behind them.

Take performance tuning on a program. To be honest, with few exceptions, the language that the tuning is being applied to and the program that the tuning is being applied to are not as important as the approach to tuning. And that is where performance tuning scares developer with faint hearts because of one glaring truth about performance tuning. There is a honeymoon period of tuning, where there are some low hanging “performance” fruit that are easy to solve. After that, the tuning is more miss than hit. From my experience, and the experience of others, once you are in that second phase, you must be prepared to fail way more often than succeed.

And that scares some people. I know it sometimes scares me. I like to be able to make a change and see that change. But performance tuning can be a lot of “nah, the readability suffers too much for such a small improvement” or “nah, that difference in performance is not significant”. And even for good performance trained developers, the percentage of “good” experiments often does not exceed 10% once past the honeymoon period.

That is where my experience comes in. I knew I was lucky to have such an obvious gift presented to me in terms of where the performance hit for the project was. I was also appreciative that previous rounds of performance improvements on prior projects allowed me to know an approximate name of the parameter that I wanted to look for. And I am also aware that the honeymoon period for finding the easy solutions was not going to last much longer.

But, due to my experience, I was okay with that. I knew I was off to a good start, and I now had good solid experience in the performance measuring cycle with Python. So, as I said in the Introduction section:

I was stoked!

What is Next?¶

With one foot into performance improvements, it seems like a good time to go all in and to try and improve the performance of the PyMarkdown project some more. Stay Tuned!

-

Yes, I am not doing any safety checking on this. I was doing a simple test to see if this would work. That kind of safety checking was added before I checked in the actual version of this class that made it into the repository. ↩

Comments

So what do you think? Did I miss something? Is any part unclear? Leave your comments below.